You can modularize your code base as much as you like, but how much confidence do you have in each of the modules? If one of the E2E tests fails, how would you pinpoint the source of the error? How do you know which module is faulty? You need a lower level of testing that works at the module level to ensure that each module works as a distinct, standalone unit: you need unit tests. Likewise, you should test that multiple units work well together as a larger logical unit; to do this, you need to implement some integration tests.

Picking a testing framework

While there’s only one de facto testing framework for E2E tests for JavaScript (Cucumber), there are several popular testing frameworks for unit and integration tests, namely Jasmine, Mocha, Jest, and AVA.

You’ll be using Mocha for this article, and here’s the rationale behind that decision. As always, there are pros and cons for each choice:

1) Maturity

Jasmine and Mocha have been around for the longest, and for many years were the only two viable testing frameworks for JavaScript and Node. Jest and AVA are the new kids on the block. Generally, the maturity of a library correlates with the number of features and the level of support.

2) Popularity

Generally, the more popular a library is, the larger the community and the higher the likelihood of receiving support when things go awry. To gauge popularity, consider several metrics (correct as of September 7, 2018):

- GitHub stars: Jest (20,187), Mocha (16,165), AVA (14,633), Jasmine (13,816)

- Exposure (percentage of developers who have heard of it): Mocha (90.5%), Jasmine (87.2%), Jest (62.0%), AVA (23.9%)

- Developer satisfaction (percentage of developers who have used the tool and would use it again): Jest (93.7%), Mocha (87.3%), Jasmine (79.6%), AVA (75.0%).

3) Parallelism

Mocha and Jasmine both run tests serially (one after the other), which means they can be quite slow. In contrast, AVA and Jest, by default, run unrelated tests in parallel as separate processes, so one test suite doesn’t have to wait for the preceding one to finish before it starts.

4) Backing

Jasmine is maintained by developers at Pivotal Labs, a software consultancy from San Francisco. Mocha was created by TJ Holowaychuk and is maintained by several developers. Although it is not maintained by a single company, it is backed by larger companies such as Sauce Labs, Segment, and Yahoo!. AVA was started in 2015 by Sindre Sorhus and is maintained by several developers. Jest is developed by Facebook and so has the best backing of all the frameworks.

5) Composability

Jasmine and Jest have different tools bundled into one framework, which is great to get started quickly, but it means that you can’t see how everything fits together. Mocha and AVA, on the other hand, simply run the tests, and you can use other libraries such as Chai, Sinon, and nyc for assertions, mocking, and coverage reports, respectively. Mocha allows you to compose a custom testing stack. By doing this, it allows you to examine each testing tool individually, which is beneficial for your understanding. However, once you understand the intricacies of each testing tool, do try Jest, as it is easier to set up and use.

You can find the necessary code for this article at this GitHub repo.

Installing Mocha

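First, install Mocha as a development dependency. Assuming you are using Yarn, as in the rest of this article, you can do this by running yarn add mocha --dev.

This will install an executable, mocha, at node_modules/mocha/bin/mocha, which you can execute later to run your tests.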

Structuring your test files

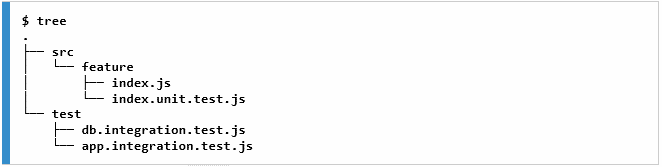

Next, you’ll write your unit tests, but where should you put them? There are generally two approaches:

- Placing all tests for the application in a top-level test/ directory

- Placing the unit tests for a module of code next to the module itself, and using a generic test directory only for application-level integration tests (for example, testing integration with external resources such as databases)

The second approach (as shown in the following example) is better as it keeps each module truly separated in the filesystem:

Furthermore, you’ll use the .test.js extension to indicate that a file contains tests (although using .spec.js is also a common convention). You’ll be even more explicit and specify the type of test in the extension itself; that is, using .unit.test.js for unit tests, and .integration.test.js for integration tests.

Writing your first unit test

Now, write unit tests for the generateValidationErrorMessage function. But first, convert your src/validators/errors/messages.js file into its own directory so that you can group the implementation and test code together. From inside the src/validators/errors directory, run:

    $ mkdir messages
    $ mv messages.js messages/index.js
    $ touch messages/index.unit.test.js

Next, in index.unit.test.js, import the assert library and your index.js file:

    import assert from 'assert';
    import generateValidationErrorMessage from '.';

Now, you’re ready to write your tests.

Describing the expected behavior

When you installed the mocha npm package, it provided you with the mocha command to execute your tests. When you run mocha, it will inject several functions, including describe and it, as global variables into the test environment. The describe function allows you to group relevant test cases together, and the it function defines the actual test case.

Inside index.unit.test.js, define your first describe block:

    import assert from 'assert';
    import generateValidationErrorMessage from '.';

    describe('generateValidationErrorMessage', function () {
      it('should return the correct string when error.keyword is "required"', function () {
        const errors = [{
          keyword: 'required',
          dataPath: '.test.path',
          params: {
            missingProperty: 'property',
          },
        }];
        const actualErrorMessage = generateValidationErrorMessage(errors);
        const expectedErrorMessage = "The '.test.path.property' field is missing";
        assert.equal(actualErrorMessage, expectedErrorMessage);
      });
    });

Both the describe and it functions accept a string as their first argument, which is used to describe the group/test. The description has no influence on the outcome of the test, and is simply there to provide context for someone reading the tests.

The second argument of the it function is another function where you’d define the assertions for your tests. The function should throw an AssertionError if the test fails; otherwise, Mocha will assume that the test should pass.

In this test, you created a dummy errors array that mimics the errors array typically generated by Ajv. You then passed the array into the generateValidationErrorMessage function and captured its return value. Lastly, you compared the actual output with your expected output; if they match, the test should pass; otherwise, it should fail.

Overriding ESLint for test files

The preceding test code should have caused some ESLint errors. This is because you violated three rules:

- func-names: Unexpected unnamed function

- prefer-arrow-callback: Unexpected function expression

- no-undef: describe is not defined

Now fix them before you continue.

Understanding arrow functions in Mocha

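Recall why function expressions matter in the Cucumber step definitions of your E2E tests: if you’d used arrow functions, this would be bound lexically to the enclosing scope (in your case, the top level of the file) rather than to the context object, and you’d have to go back to using file-scope variables to maintain state between steps.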

As it turns out, Mocha also uses this to maintain a “context”. However, in Mocha’s vocabulary, a “context” is not used to persist state between steps; rather, a Mocha context provides the following methods, which you can use to control the flow of your tests:

- this.timeout(): To specify how long, in milliseconds, to wait for a test to complete before marking it as failed

- this.slow(): To specify how long, in milliseconds, a test should run for before it is considered “slow”

- this.skip(): To skip/abort a test

- this.retries(): To retry a failed test up to a specified number of times

It is also impractical to give names to every test function; therefore, you should disable both the func-names and prefer-arrow-callback rules.
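To see why function expressions matter here, consider the following sketch. It doesn’t use Mocha at all; runWithContext and the context object are hypothetical stand-ins for a test runner that invokes each callback with its own context as this.

```javascript
// Hypothetical stand-in for the context object that a test runner
// such as Mocha binds to `this` when it calls your test function.
const context = {
  timeoutMs: 2000,
  timeout(ms) {
    this.timeoutMs = ms;
  },
};

// Hypothetical runner: invokes the callback with `context` as `this`.
function runWithContext(callback) {
  return callback.call(context);
}

// A function expression receives the context, so this.timeout() works.
const functionSawContext = runWithContext(function () {
  this.timeout(5000);
  return this === context;
});

// An arrow function ignores the `this` passed by call(); it keeps the
// `this` of the scope where it was defined, so the context is out of reach.
const arrowSawContext = runWithContext(() => this === context);

console.log(functionSawContext); // true
console.log(arrowSawContext); // false
console.log(context.timeoutMs); // 5000
```

In a real Mocha test, the same reasoning applies to calls such as this.timeout() inside an it callback, which is why the prefer-arrow-callback rule gets in the way for test files.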

So, how do you disable these rules for your test files? For your E2E tests, you created a new .eslintrc.json and placed it inside the spec/ directory. This applied those configurations to all files under the spec/ directory. However, your unit test files are not separated into their own directory but interspersed with your application code. Therefore, creating a new .eslintrc.json won’t work.

Instead, you can add an overrides property to your top-level .eslintrc.json, which allows you to override rules for files that match the specified file glob(s). Update .eslintrc.json to the following:

    {
      "extends": "airbnb-base",
      "rules": {
        "no-underscore-dangle": "off"
      },
      "overrides": [
        {
          "files": ["*.test.js"],
          "rules": {
            "func-names": "off",
            "prefer-arrow-callback": "off"
          }
        }
      ]
    }

Here, you indicate that files with the extension .test.js should have the func-names and prefer-arrow-callback rules turned off.

Specifying ESLint environments

However, ESLint will still complain that you are violating the no-undef rule. This is because when you invoke the mocha command, it will inject the describe and it functions as global variables. However, ESLint doesn’t know this is happening and warns you against using variables that are not defined inside the module.

You can instruct ESLint to ignore these undefined globals by specifying an environment. An environment defines global variables that are predefined. Update your overrides array entry to the following:

    {
      "files": ["*.test.js"],
      "env": {
        "mocha": true
      },
      "rules": {
        "func-names": "off",
        "prefer-arrow-callback": "off"
      }
    }

Now, ESLint should not complain anymore!

Running your unit tests

To run your tests, you’d normally just run npx mocha. However, when you try that here, you’ll get a warning:

    Warning: Could not find any test files matching pattern: test
    No test files found

This is because, by default, Mocha will try to find a directory named test at the root of the project and run the tests contained inside it. Since you placed your test code next to the corresponding module code, you must inform Mocha of the location of these test files. You can do this by passing a glob matching your test files as an argument to mocha. Try running npx mocha "src/**/*.test.js"; at this point, you should see an error similar to the following:

    src/validators/users/errors/index.unit.test.js:1
    (function (exports, require, module, __filename, __dirname) { import assert from 'assert';
                                                                  ^^^^^^
    SyntaxError: Unexpected token import
    ….

You got another error. This error occurs because Mocha is not using Babel to transpile your test code before running it. You can use Mocha’s --require flag to load the @babel/register package before the tests run, for example npx mocha "src/**/*.test.js" --require @babel/register. The test should now run and pass:

    generateValidationErrorMessage
      should return the correct string when error.keyword is "required"

    1 passing (32ms)

Note that the descriptions passed into describe and it are displayed in the test output.

Running unit tests as an npm script

Typing out the full mocha command each time can be tiresome. Therefore, you should create an npm script just like you did with the E2E tests. Add the following to the scripts object inside your package.json file:

Furthermore, update your existing test npm script to run all your tests (both unit and E2E):
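As a sketch, and assuming that your existing E2E script is called test:e2e (a hypothetical name, since that script isn’t shown in this article) and that your test files match a glob such as src/**/*.test.js, the relevant entries in package.json might look something like this:

```json
{
  "scripts": {
    "test:unit": "mocha \"src/**/*.test.js\" --require @babel/register",
    "test": "yarn run test:unit && yarn run test:e2e"
  }
}
```

Only the test:unit and test entries are shown; keep whatever other scripts you already have alongside them.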

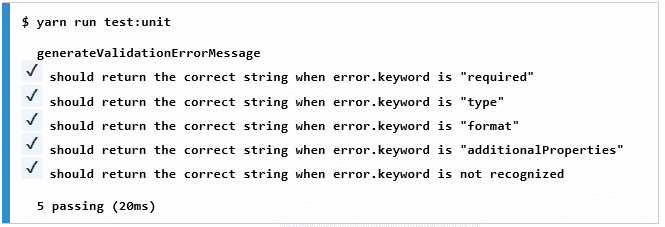

Now, run your unit tests by running yarn run test:unit, and run all your tests with yarn run test. You’ve now completed your first unit test, so commit the changes:

    git commit -m "Implement first unit test for generateValidationErrorMessage"

Completing your first unit test suite

You have only covered a single scenario with your first unit test. Therefore, you should write more tests to cover every scenario. Try completing the unit test suite for generateValidationErrorMessage yourself; once you are ready, compare your solution with the following one:

    import assert from 'assert';
    import generateValidationErrorMessage from '.';

    describe('generateValidationErrorMessage', function () {
      it('should return the correct string when error.keyword is "required"', function () {
        const errors = [{
          keyword: 'required',
          dataPath: '.test.path',
          params: {
            missingProperty: 'property',
          },
        }];
        const actualErrorMessage = generateValidationErrorMessage(errors);
        const expectedErrorMessage = "The '.test.path.property' field is missing";
        assert.equal(actualErrorMessage, expectedErrorMessage);
      });

      it('should return the correct string when error.keyword is "type"', function () {
        const errors = [{
          keyword: 'type',
          dataPath: '.test.path',
          params: {
            type: 'string',
          },
        }];
        const actualErrorMessage = generateValidationErrorMessage(errors);
        const expectedErrorMessage = "The '.test.path' field must be of type string";
        assert.equal(actualErrorMessage, expectedErrorMessage);
      });

      it('should return the correct string when error.keyword is "format"', function () {
        const errors = [{
          keyword: 'format',
          dataPath: '.test.path',
          params: {
            format: 'email',
          },
        }];
        const actualErrorMessage = generateValidationErrorMessage(errors);
        const expectedErrorMessage = "The '.test.path' field must be a valid email";
        assert.equal(actualErrorMessage, expectedErrorMessage);
      });

      it('should return the correct string when error.keyword is "additionalProperties"', function () {
        const errors = [{
          keyword: 'additionalProperties',
          dataPath: '.test.path',
          params: {
            additionalProperty: 'email',
          },
        }];
        const actualErrorMessage = generateValidationErrorMessage(errors);
        const expectedErrorMessage = "The '.test.path' object does not support the field 'email'";
        assert.equal(actualErrorMessage, expectedErrorMessage);
      });
    });
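The implementation of generateValidationErrorMessage isn’t shown in this article. If you want something concrete to run the tests against, the following is a hypothetical sketch that would satisfy the four cases above. The message formats are inferred from the expected strings in the tests, and the fallback message in the default branch is purely an assumption.

```javascript
// Hypothetical sketch: builds a message from the first Ajv-style error object.
// The message formats are inferred from the expected strings in the tests.
function generateValidationErrorMessage(errors) {
  const error = errors[0];
  switch (error.keyword) {
    case 'required':
      return `The '${error.dataPath}.${error.params.missingProperty}' field is missing`;
    case 'type':
      return `The '${error.dataPath}' field must be of type ${error.params.type}`;
    case 'format':
      return `The '${error.dataPath}' field must be a valid ${error.params.format}`;
    case 'additionalProperties':
      return `The '${error.dataPath}' object does not support the field '${error.params.additionalProperty}'`;
    default:
      // Assumed fallback; the article does not say what happens for other keywords.
      return 'The object is not valid';
  }
}

const exampleMessage = generateValidationErrorMessage([{
  keyword: 'type',
  dataPath: '.test.path',
  params: { type: 'string' },
}]);
console.log(exampleMessage); // The '.test.path' field must be of type string
```

In your project, this function would live in messages/index.js and be exported as the default export so that the import at the top of the test file resolves to it.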

Run the tests again, and note how the tests are grouped under the describe block:

You have now completed the unit tests for generateValidationErrorMessage, so commit it:

    git commit -m "Complete unit tests for generateValidationErrorMessage"

Conclusion

If you found this article interesting, you can explore Building Enterprise JavaScript Applications to strengthen your applications by adopting Test-Driven Development (TDD), the OpenAPI Specification, Continuous Integration (CI), and container orchestration. Building Enterprise JavaScript Applications will help you gain the skills needed to build robust, production-ready applications.